AI pilots are deceptive. A proof-of-concept chatbot trims call times, a fraud model spots anomalies in test data, or a small gen-AI service delights a focus group. The pilot runs on a few GPUs, with API credits and a small team. Budgets look reasonable, adoption looks near.

But here’s the catch: pilots almost always understate the true cost of AI. Moving from a sandbox experiment to an enterprise-grade AI platform is when the real financial surprises hit.

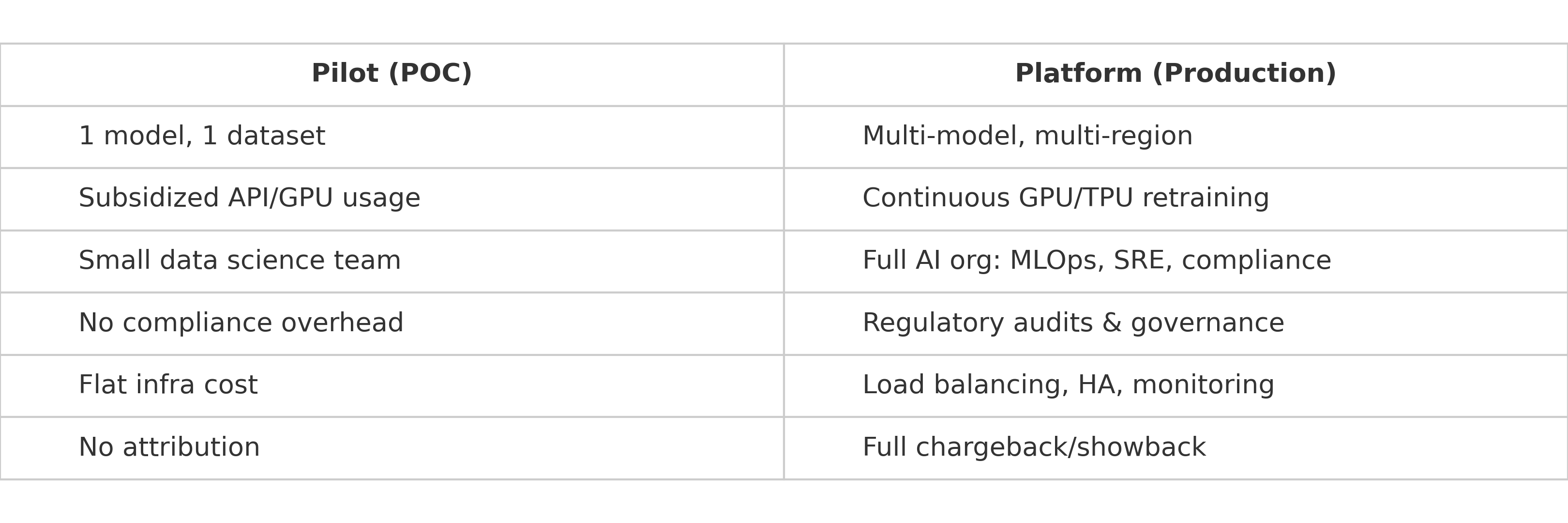

Pilots are deceptively cheap

Pilots are built for experimentation:

- Narrow scope

One dataset, one region, a fixed time window, and a limited set of initial users or beta customers.

- Lightweight infrastructure

A small GPU cluster, a limited number of direct API calls, and no SLAs for high availability or auto-scaling.

- Minimal overhead

A small development team, no compliance audits, limited monitoring, and no ongoing data preparation or retraining.

At first glance, these pilot costs seem small, so it’s natural to assume they will scale linearly in production. They do not. Each added model, region, or user group compounds the cost of training, versioning, data management, and personnel, so total spend grows far faster than usage does.

Costs multiply in production

Once you move into production, the gaps appear:

- Infrastructure

Real-time inference requires redundancy and often multi-region replication. Retraining, fine-tuning, data storage, and token costs multiply rapidly as models are refreshed and the user base grows.

- Operations

24/7 uptime, auto-scaling, high-availability, monitoring, and incident response.

- Compliance

Handling live customer data means audits, uptime and performance SLAs, and governance controls.

- Developer costs

MLOps engineers, SREs, compliance leads, and product managers expand the payroll beyond the small initial team.

- Metering & Attribution

Pilots don’t need strict tagging, but scaled platforms must show which business unit or team is responsible for which spend.

These new costs can translate to a 3-7x increase in project TCO from pilot to production. Even worse, each cost area outlined above is generally managed by a different person or team. The end result is a sprawling, complex cost picture in which no one has a single, unified view of the full production workload from end to end.
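To make the jump concrete, here is a rough back-of-the-envelope sketch of how the five cost areas above might roll up. Every dollar figure and owner name is hypothetical, picked only so the result falls inside the 3-7x range; substitute your own numbers.

```python
# Illustrative only: all dollar amounts and owner names are hypothetical.
PILOT_COSTS_USD = {
    "infrastructure": 40_000,
    "operations": 5_000,
    "compliance": 0,
    "developers": 90_000,
    "metering_attribution": 0,
}

# In production, each cost area tends to sit with a different owner,
# so each value is an (owner, annual cost in USD) pair.
PRODUCTION_COSTS_USD = {
    "infrastructure": ("platform team", 220_000),
    "operations": ("SRE team", 90_000),
    "compliance": ("risk office", 60_000),
    "developers": ("engineering", 330_000),
    "metering_attribution": ("finance", 20_000),
}

pilot_total = sum(PILOT_COSTS_USD.values())
production_total = sum(usd for _owner, usd in PRODUCTION_COSTS_USD.values())
multiplier = production_total / pilot_total

# Print a per-area comparison, then the unified totals.
for area, (owner, usd) in PRODUCTION_COSTS_USD.items():
    print(f"{area:<22}{owner:<16}${PILOT_COSTS_USD[area]:>9,} -> ${usd:>9,}")
print(f"Total: ${pilot_total:,} -> ${production_total:,} ({multiplier:.1f}x)")
```

The point of the exercise is less the multiplier than the owner column: until someone adds up the rows, no single team sees the total.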

Side-by-side comparison

Bridging the cost visibility gap with Amberflo

This is where Amberflo comes in.

Amberflo provides the real-time cost transparency needed to turn scaling AI into a manageable, predictable process. Instead of chasing costs after the fact, Amberflo enables:

- Unified view of AI costs

Track spend across tokens, GPUs, APIs, storage, and data pipelines in a single view.

- Accurate cost allocation

Tag and allocate costs to business units, projects, or AI lifecycle phases, even when usage is complex or distributed across teams and locations.

- Real-time metering and alerts

Move from monthly billing surprises to live dashboards tracking consumption and spend, with real-time alerts that fire the moment a budget or usage threshold is reached.

- Cross-cloud, cross-phase coverage

Whether it’s data prep, training, inference, or agent usage, and whether it’s in a public cloud, private cloud, or on-prem data center, Amberflo shows the complete picture.
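The ideas behind tag-based allocation and budget alerts can be sketched generically in a few lines of Python. This is not Amberflo's API: the `UsageEvent` type, the meter names, the unit prices, and the 80% alert threshold are all assumptions made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class UsageEvent:
    """One metered usage record carrying allocation tags (hypothetical schema)."""
    meter: str            # what was consumed, e.g. "gpu_hours"
    quantity: float
    business_unit: str    # tag: who pays
    phase: str            # tag: AI lifecycle phase

# Hypothetical unit prices in USD per unit of each meter.
UNIT_PRICE_USD = {"tokens_1k": 0.002, "gpu_hours": 2.50, "storage_gb": 0.02}

def allocate(events, tag):
    """Sum cost per value of the given tag ("business_unit" or "phase")."""
    totals = defaultdict(float)
    for event in events:
        totals[getattr(event, tag)] += event.quantity * UNIT_PRICE_USD[event.meter]
    return dict(totals)

def budget_alerts(totals, budgets, threshold=0.8):
    """Return names whose spend has reached `threshold` of their budget."""
    return sorted(
        name for name, spent in totals.items()
        if name in budgets and spent >= threshold * budgets[name]
    )

events = [
    UsageEvent("gpu_hours", 40, "fraud", "training"),
    UsageEvent("tokens_1k", 50_000, "support", "inference"),
    UsageEvent("gpu_hours", 10, "support", "inference"),
    UsageEvent("storage_gb", 500, "fraud", "data_prep"),
]

by_unit = allocate(events, "business_unit")
by_phase = allocate(events, "phase")
alerts = budget_alerts(by_unit, budgets={"fraud": 200.0, "support": 150.0})
print(by_unit, by_phase, alerts)
```

Because every event carries its tags at the moment it is metered, the same stream can be sliced by business unit or by lifecycle phase without re-instrumenting anything, and the budget check can run as events arrive rather than at month end.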

With real-time usage tracking and cost calculations across any resource or location, Amberflo helps monitor and control AI costs at any scale, from simple pilot projects all the way to enterprise-grade production workloads.
